
We talk with our IT clients on a regular basis, and also conduct primary research into emerging IT trends. One of the leading trends we hear is the adoption and deployment of cloud native applications, including containers and Kubernetes, and the infrastructure changes required.

The transformation of IT toward cloud native applications has significant implications for on-premises as well as public cloud environments. With containers and Kubernetes come new storage interfaces and storage system requirements. Storage and data protection for container-based applications have been among the biggest concerns we’ve heard from our IT clients for several years.

Some of the common questions we hear are:

- What performance can I expect from container native storage?

- How does traditional storage perform with container applications?

- Is there any published performance data comparing storage options?

The storage landscape for containers is evolving rapidly, with the CNCF working to broker common terms along with standards and other aspects needed for interoperability. The standard for storage access by containers is known as the Container Storage Interface or CSI, which enables new and existing storage systems to provide container storage. Evaluator Group has begun using the terminology “Container Native Storage” to describe software defined storage that operates using containers, and “Container Ready Storage” for traditional storage systems that are able to support container applications via a CSI interface.

There are significant differences between vendors’ container storage platforms in terms of features, complexity, manageability and performance. Providing a CSI driver is the minimum requirement for supporting Kubernetes environments, but other considerations should not be ignored. For example, how is storage management performed? Through a CLI only, or through a web service that must somehow be connected to your Kubernetes console? Too many vendors claim to provide storage management and metrics merely because a DevOps person could conceivably access data using Prometheus or Grafana. These tools are cumbersome at best and require significant expertise to produce meaningful performance metrics.

In order to gain some hands-on experience with several offerings, we decided to dive into a real-world comparison of several different container storage offerings, evaluating the complexity, management capabilities and performance in particular.

Real World Performance Testing

We recently worked with Red Hat, which sponsored a performance study of three leading software defined storage systems used for container workloads. The goal was to run realistic, unbiased tests in order to fairly compare the performance of these storage systems.

Note: For details on this test report, please visit RedHat.com

Whenever we contract with a company to provide performance testing, we inform them that the testing is guaranteed but the results are not, meaning we don’t know beforehand how any storage system will compare to the others. With this understanding, we agreed to use a workload generation tool known as “Sherlock”, which is able to instantiate and control multiple container instances of specific application workloads. The workload we chose for comparison was the Sysbench TPC database transaction workload.

Another important factor was the size and number of the database instances. While many storage systems may appear to perform well with a few applications, real-world systems must scale to support multiple applications simultaneously. A common mistake in performance testing is to run a small workload that fits within the storage system’s cache, which does not provide accurate results at scale.

Our tests were designed to utilize approximately 75% of the available storage capacity, which far exceeded the cache of every storage system tested. Some of the test details include:

- Testing used the Sherlock tool to create and run multiple Sysbench TPC workloads

- Scaled Sysbench containers from 3 up to 36 instances

- Each Sysbench instance used 500 GB of storage capacity

- Total utilized capacity was 18 TB of the 24 TB usable storage capacity

- Comparison of three different storage systems using a common workload:

  - Red Hat OpenShift Data Foundation (a Container Native Storage system)

  - Vendor A (a competing Container Ready Storage system)

  - Vendor B (a competing Container Native Storage system)
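The capacity figures in the list above can be cross-checked with a few lines of arithmetic. The following is a minimal sketch rather than part of the test harness; it assumes decimal units (1 TB = 1000 GB), and the function and constant names are illustrative only.

```python
# Sizing check using the figures quoted in the test details above.
# Assumption: decimal units, 1 TB = 1000 GB.
INSTANCE_GB = 500    # capacity used by each Sysbench instance
MAX_INSTANCES = 36   # largest scale point tested
USABLE_TB = 24       # usable capacity available to the workload


def utilization(instances: int,
                instance_gb: int = INSTANCE_GB,
                usable_tb: int = USABLE_TB) -> float:
    """Return the fraction of usable capacity consumed by the instances."""
    return instances * instance_gb / (usable_tb * 1000)


total_tb = MAX_INSTANCES * INSTANCE_GB / 1000
print(f"{MAX_INSTANCES} instances -> {total_tb:.0f} TB, "
      f"{utilization(MAX_INSTANCES):.0%} of usable capacity")
# prints: 36 instances -> 18 TB, 75% of usable capacity
```

At the smallest scale point of 3 instances, the same function gives about 6% utilization, which illustrates why a small test can sit largely in cache while the full 36-instance run cannot.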

The Container Ready Storage option tested (referred to here as “Vendor A”) has been used by Evaluator Group previously, so we were quite familiar with its setup and optimization. Of the options available, we chose the highest performance configuration our hardware allowed: four large VM instances, each on a different node in a cluster, along with a total of 24 NVMe storage devices available via PCIe passthrough.

The other Container Native Storage system tested (referred to as “Vendor B”) is a common storage system used in container environments. We worked with the vendor’s support team to ensure our configuration was optimal for the available hardware. The hardware used was identical for Vendor B and Red Hat ODF, both using 3 dedicated worker nodes in an OpenShift cluster, along with a total of 24 NVMe storage devices.

Finally, it is important to note that we made every attempt to optimize each of the three storage systems tested, using the same hardware wherever possible. Red Hat’s OpenShift Data Foundation and Vendor B were both Container Native Storage (CNS) systems, and Vendor A was a Container Ready Storage (CRS) system. All three were accessed by containers via a CSI interface.

Performance Comparison

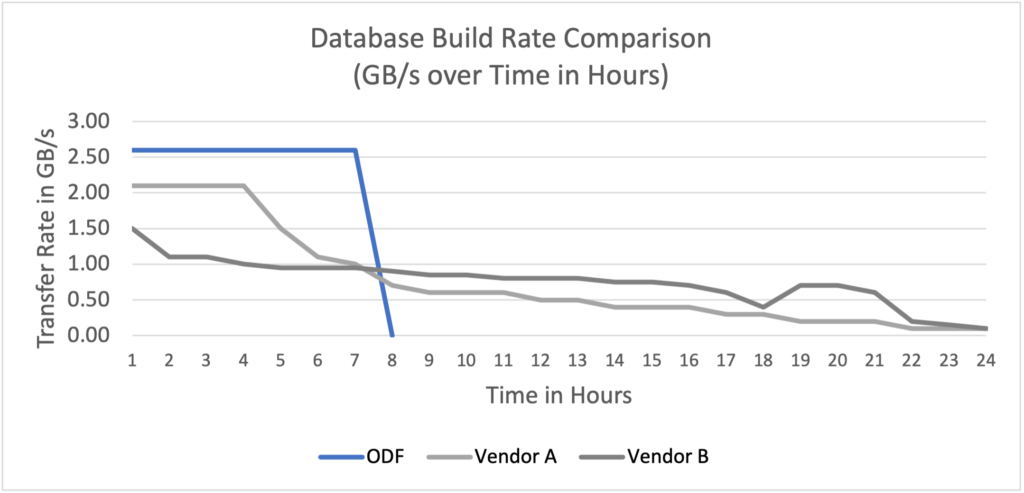

Our results showed significant differences between Red Hat OpenShift Data Foundation (ODF) storage and the two alternatives tested. The most notable difference was the superior performance scalability of ODF, along with its consistent performance over time. The first test measured the time required to populate 36 database instances with data, for a total of 18 TB. As with all database operations, a shorter time indicates better performance.


Here Red Hat ODF outperformed both competitors by approximately 3x, completing in less than 8 hours versus nearly 24 hours for both competitors. See Figure 1 below.

Figure 1: Database Build Time ODF vs. Alternatives (Source Evaluator Group Testing)
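To put the build times in perspective, they can be converted into an effective populate rate. This is a rough sketch under stated assumptions: it treats "less than 8 hours" and "nearly 24 hours" as exactly 8 and 24 hours, uses decimal units, and counts only the 18 TB of populated capacity, not any additional I/O from logging or indexing. The function name is illustrative and these rates are not measurements from the report.

```python
# Effective populate rate implied by the quoted build times.
# Assumptions: 8 h and 24 h are rounded bounds from the text,
# 1 TB = 1,000,000 MB, and only the populated capacity is counted.
DATA_TB = 18  # total data populated across 36 instances


def effective_mb_per_s(data_tb: float, hours: float) -> float:
    """Average MB/s needed to write data_tb terabytes in the given hours."""
    return data_tb * 1_000_000 / (hours * 3600)


odf_rate = effective_mb_per_s(DATA_TB, 8)
competitor_rate = effective_mb_per_s(DATA_TB, 24)

print(f"ODF: ~{odf_rate:.0f} MB/s, competitors: ~{competitor_rate:.0f} MB/s, "
      f"ratio ~{odf_rate / competitor_rate:.1f}x")
```

Because ODF finished in under 8 hours, its actual rate was somewhat higher than this estimate, which is consistent with the approximately 3x advantage reported.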

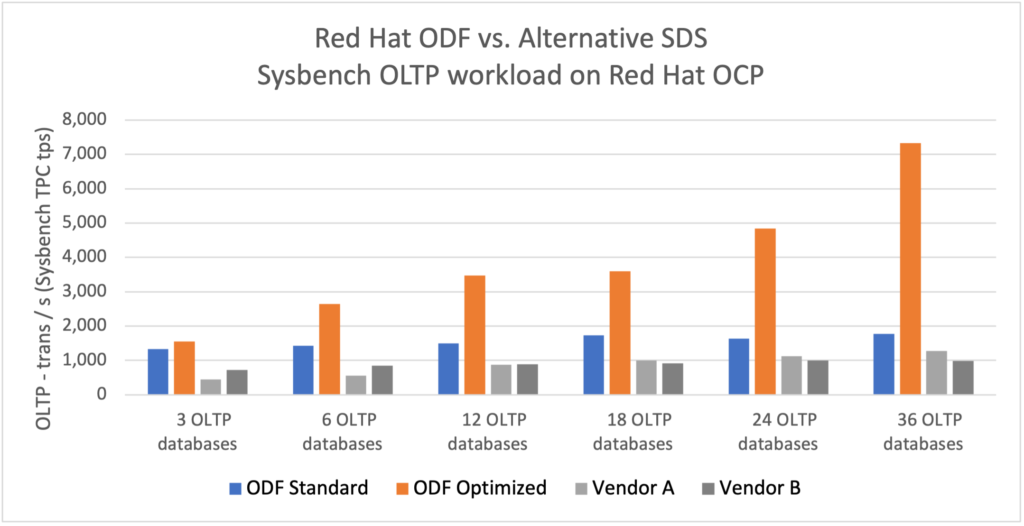

During the Sysbench performance testing itself, Red Hat ODF outperformed the two competitors in every instance:

- Using the standard configuration for Red Hat OpenShift Data Foundation while scaling the workload vs. the competitors:

  - ODF achieved 1.4x – 3.0x the total TPC transactions per second of “Vendor A”

  - ODF achieved 1.8x the total TPC transactions per second of “Vendor B”

- Using an optimized configuration for Red Hat ODF while scaling the workload vs. the competitors:

  - ODF achieved 3.5x – 5.7x the total TPC transactions per second of “Vendor A”

  - ODF achieved 2.1x – 7.5x the total TPC transactions per second of “Vendor B”

Figure 2: Red Hat ODF Storage Performance vs. Alternatives (Source Evaluator Group Testing)

Final Thoughts

There are many aspects to consider when evaluating storage options for cloud native and container environments. These include ease of use, manageability, reliability, scalability and other criteria. Undoubtedly, performance is one critical metric that impacts application service levels and may also have significant cost and architectural implications.

Although our testing was focused on performance, we were also able to make some qualitative judgements on ease of use and manageability, and to form specific opinions on scalability as it pertained to our scale-up performance testing.

We found significant differences between the three container storage platforms tested in terms of their complexity, manageability and performance. Of the three, the traditional storage system with a CSI driver, “Vendor A”, provided a readily usable management interface with the storage management and metric information typically expected from a storage system. However, its integration with Kubernetes was rudimentary, and integration with OpenShift in particular was non-existent apart from the core Kubernetes capabilities.

In contrast, the second competing storage offering, “Vendor B”, provided better Kubernetes integration, in part due to its container native architecture. However, its management features were very limited and provided only basic information of limited usefulness. Enabling its management UI was difficult, and it provided no integration with OpenShift.

The third option tested was the Red Hat OpenShift Data Foundation storage offering, which also uses a container native architecture to provide storage and data services to containers running on the OpenShift Container Platform. As we had hoped, the integration with OpenShift helped to simplify the configuration and management of ODF storage. Although the metrics provided via the OpenShift console were basic, they did offer a fast and integrated method of monitoring storage. Also, as with Vendor B, additional metrics can be obtained via OpenShift’s Prometheus and Grafana facilities.

The biggest differentiator between the three storage options tested was performance. Significantly better and more scalable performance is important for many reasons. Companies are able to support more applications with the same equipment, or use less equipment to run the same number of applications, thereby saving on the capital and/or operational charges associated with IT resources. Additionally, administrators are able to offer higher application performance or service levels, which has cost and value implications for the business. Typically, performance differences between similarly configured competitors are small enough to be measured in percentages. Red Hat OpenShift Data Foundation’s performance advantages were very large, with more than 5 times the performance of one competitor and more than 7 times that of another, all using similar hardware.

Clearly, OpenShift Data Foundation should be a consideration for anyone evaluating storage options for cloud native applications running on the Red Hat OpenShift compute platform, due to its superior performance along with better management and other integration benefits.
