I recently completed testing a Hyperconverged system using three new technologies:

- Intel Xeon Scalable processor systems

- Intel Optane storage and Intel NVMe Flash

- VMware vSAN 6.6 software

The results showed significant performance improvements and, more importantly, significantly better price / performance.

The Hyperconverged system achieved world-record performance and price-performance results. The Test Report is available here, and the IOmark certification results are here.

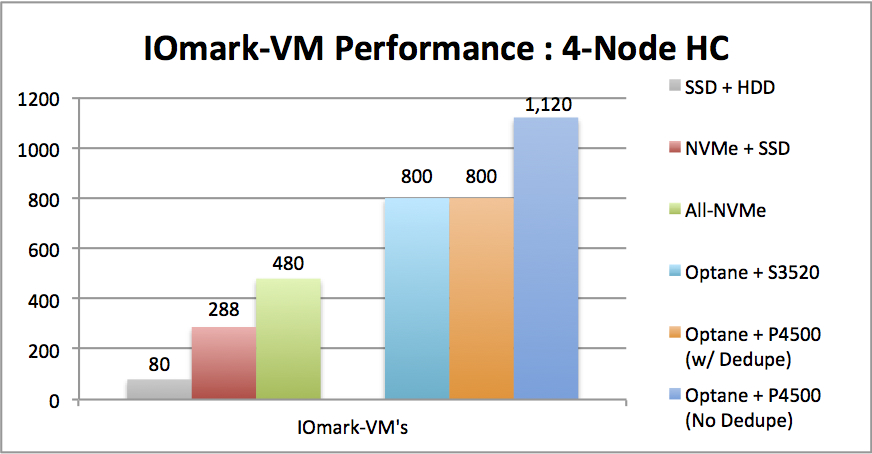

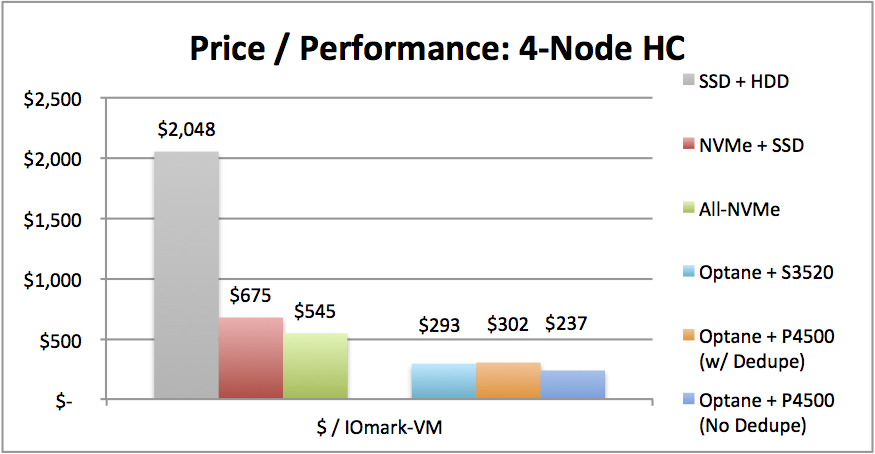

The first chart makes it clear that Optane has a big impact on performance. The three new configurations on the right all use Optane; the three configurations from last year on the left do not and instead use NVMe Flash media. Performance is interesting, but what has real value is price / performance, which leads to chart 2.

When looking at the data, remember that lower is better, i.e., a lower cost per application is better. It is clear that storage media has a massive impact on these results: first-generation hybrid systems using SSD cache and HDD for capacity are no match for all-flash systems, which are no match for NVMe systems, which in turn are no match for Optane systems.

I was excited to share these results when I happened to come across a few articles describing Optane as a disappointment: too expensive given its modest performance benefits. I found these statements to be either uninformed or, more likely, the result of not understanding the value of storage in Hyperconverged systems.

These statements remind me of the comments I heard just three or four years ago that “Flash would never become mainstream because it is just too expensive.” Focusing on the cost of something rather than its inherent value demonstrates a lack of understanding of how IT equipment is utilized and of its value in producing results.

The most important question is not “What is the cost per gigabyte?” but rather “How do you measure the value of storage?”

Many people understand that dollar-per-gigabyte metrics are an inadequate way to measure storage, because they account for neither the value of the data stored nor the amount of work being done. Cost per gigabyte measures data at rest, not data being used; if cost per gigabyte is the metric, then tape is your answer. Thus, cost per gigabyte is not the right metric for understanding the value of data in a real-time trading application or other time-critical processes.

Time has value, and improving storage performance can have significant business benefits. Delivering results, measured by the amount of work completed, is a much better metric. Fortunately, many in the IT industry now understand this, which helps explain why solid-state storage revenue is growing over 20% annually while the remaining enterprise storage revenue is declining.

Some Simple Math

The most recent Intel and vSAN Hyperconverged configuration I tested had an all-in cost of $241,633.57 and achieved 800 IOmark-VMs, for a price / performance of $302.04 / IOmark-VM. If these were the only data points available, they would be interesting but nothing more. However, IOmark-VM is a benchmark, meaning the results can be directly compared against any other published IOmark-VM results.

Last year we tested several Hyperconverged configurations, including one using Intel’s best-performing NVMe device and vSAN 6.2. The all-in price of that configuration was $206,599.84, and it achieved an industry-best 320 IOmark-VMs, at $645.62 / IOmark-VM.

The math is simple: price / performance is what matters most, as long as the cost is within budget. Using new, higher-performing components (Xeon Scalable processor systems, Optane, and vSAN 6.6), we added approximately 17% more cost but in return got 150% more work done (800 vs. 320 IOmark-VMs). That is a business proposition that IT managers, CIOs and particularly CFOs will find very compelling.
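The arithmetic in this section can be checked with a few lines of Python. This is a minimal sketch: the dollar figures and IOmark-VM counts are the ones quoted above, while the helper names `cost_per_vm` and `pct_change` are hypothetical, introduced only for illustration.

```python
def cost_per_vm(total_cost: float, iomark_vms: int) -> float:
    """Price / performance: all-in dollars per IOmark-VM (lower is better)."""
    return total_cost / iomark_vms


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new (positive means an increase)."""
    return (new - old) / old * 100.0


# Figures quoted in the text: all-in cost (USD) and IOmark-VMs achieved.
old_cost, old_vms = 206_599.84, 320  # last year: NVMe Flash + vSAN 6.2
new_cost, new_vms = 241_633.57, 800  # new: Xeon Scalable + Optane + vSAN 6.6

old_ppv = cost_per_vm(old_cost, old_vms)
new_ppv = cost_per_vm(new_cost, new_vms)

print(f"Last year: ${old_ppv:,.2f} / IOmark-VM")
print(f"New:       ${new_ppv:,.2f} / IOmark-VM")
print(f"Cost change:        {pct_change(old_cost, new_cost):+.1f}%")
print(f"Work change:        {pct_change(old_vms, new_vms):+.1f}%")
print(f"Cost per VM change: {pct_change(old_ppv, new_ppv):+.1f}%")
```

Running it prints $645.62 and $302.04 per IOmark-VM, a cost increase of about 17%, a 150% increase in work done, and a drop of roughly 53% in cost per IOmark-VM.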

As an added bonus, the new Optane configuration had 4X the usable capacity of last year’s configuration. So if you are stuck measuring the value of storage strictly on a $ per gigabyte basis, you’ll find more than a 3X improvement.
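The cost-per-gigabyte claim follows from dividing the capacity gain by the cost gain. A minimal sketch, assuming the 4X capacity figure is exact; absolute capacities are not given here, so capacity is expressed only as a ratio, and `per_gb_improvement` is a hypothetical helper name.

```python
def per_gb_improvement(cost_ratio: float, capacity_ratio: float) -> float:
    """How many times lower the cost per usable GB is (new vs. old).

    cost per GB = cost / capacity, so the old/new ratio of cost per GB
    equals capacity_ratio / cost_ratio.
    """
    return capacity_ratio / cost_ratio


cost_ratio = 241_633.57 / 206_599.84  # new all-in cost relative to last year's
capacity_ratio = 4.0                  # assumption: "4X the usable capacity" taken as exact

improvement = per_gb_improvement(cost_ratio, capacity_ratio)
print(f"Cost ratio: {cost_ratio:.2f}x, cost per usable GB is {improvement:.2f}x lower")
```

With these inputs the ratio comes out at about 3.42, consistent with “more than a 3X” improvement.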

What Made This Possible?

Put very simply, a number of technologies had to come together in order to provide these record results. In particular, when evaluating Hyperconverged systems, every aspect of the system is important and must be in balance with the rest of the system. If one aspect is faster than the system can use, that resource is wasted.

Until now, the dirty secret of Hyperconverged systems was that storage was almost always a significant bottleneck. That is, the CPU and memory were capable of doing more work than a few captive spinning drives plus an SSD and some storage software could deliver…

As I said, until now. What has changed in just the past few months is the speed of the storage media available for server-based storage: Optane is now available, along with server platforms that enable Hyperconverged storage such as vSAN 6.6 to deliver industry-leading price / performance. The primary component behind these performance gains was Optane, together with the ability of the software-defined storage (vSAN 6.6) to exploit its capabilities.
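The balance argument can be illustrated with a simplified bottleneck model, in which a node supports only as many VMs as its most constrained resource allows and any capacity beyond that sits idle. This is a hypothetical sketch: the `bottleneck` function and every capacity number below are invented for illustration and do not come from the test report, and real Hyperconverged systems interact in more complex ways than a simple minimum.

```python
from typing import Dict, Tuple


def bottleneck(capacities: Dict[str, float]) -> Tuple[str, float, Dict[str, float]]:
    """Simplified model: the node does only as much work as its slowest resource allows.

    Returns the limiting resource, the achievable throughput, and the unused
    headroom of each resource (0 for the limiter itself).
    """
    limiter = min(capacities, key=lambda name: capacities[name])
    achievable = capacities[limiter]
    headroom = {name: cap - achievable for name, cap in capacities.items()}
    return limiter, achievable, headroom


# HYPOTHETICAL capacities, in "VMs this resource could support on its own".
# Invented for illustration; these are not measured values from the test report.
hybrid_node = {"cpu": 500, "memory": 450, "storage": 150}
optane_node = {"cpu": 500, "memory": 450, "storage": 600}

for label, node in (("Hybrid SSD + HDD", hybrid_node), ("Optane", optane_node)):
    limiter, achievable, headroom = bottleneck(node)
    wasted = {name: h for name, h in headroom.items() if h > 0}
    print(f"{label}: limited by {limiter} at {achievable} VMs; idle headroom {wasted}")
```

In the hypothetical hybrid node, storage caps the node at 150 VMs while CPU and memory headroom go unused; with faster storage, the limit moves to another resource, which is why every part of the system has to be in balance.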

Take Away

It is human nature to believe that expensive components can be replaced with cheaper ones without much impact on performance. It seems logical that using less expensive, slower components would improve price / performance.

However, it is simply wrong. The most expensive Hyperconverged systems on a cost-per-VM basis are those with slower storage. The slower the storage, the more expensive the system is when measured per unit of work, i.e., per virtual application supported. The Evaluator Group analysis published last year shows this very clearly with many data points, using published benchmark data.

Claiming “Optane is dead” shows a lack of understanding of the financial value of system performance and how storage impacts systems overall. Opinion without facts is something we hear too often these days.